Deloitte will issue a partial refund to the federal government after admitting that it used AI in a $440,000 report. Why is this an issue? Because the AI had “hallucinated” references and citations that didn’t exist. When an AI hallucinates, it presents something untrue as fact, typically by guessing or filling in gaps. It does this because a generative AI is built to produce a plausible response to what you ask, not necessarily a factually true one. This case is a great example of what can happen when AI is used unchecked at work. Is your business facing a similar risk? In this article, we’ll discuss what employers need to know about using AI at work, and how they can protect themselves from the pitfalls.

Why is AI at work a risk?

Artificial intelligence offers incredible potential for boosting productivity, automating repetitive tasks, and uncovering new insights from data. Employees are already using it to draft emails, summarise long documents, and write code. However, this powerful technology comes with a new set of risks that businesses cannot afford to ignore.

The core issue stems from how generative AI models, like the one used in the Deloitte case, operate. They are designed to predict the next most likely word in a sequence, creating fluent and convincing text. They are not databases of fact. This means they can confidently present incorrect information, invent sources, and produce biased or flawed output. Without human oversight and critical evaluation, relying on this output can lead to significant errors.
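To make the “next most likely word” idea concrete, here is a deliberately tiny sketch. It is not how a production model works (those use neural networks trained on vast amounts of text), and the function names and sample corpus are invented for illustration. It only shows the principle: the program picks whichever word most often followed the previous one, and nothing in it checks whether the resulting sentence is true.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: str) -> dict:
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def generate(model: dict, start: str, max_words: int = 8) -> str:
    """Extend `start` by repeatedly choosing the most frequent follower.

    There is no fact-checking step: the output is whatever is statistically
    likely given the training text, whether or not it is correct.
    """
    output = [start]
    for _ in range(max_words - 1):
        candidates = model.get(output[-1])
        if not candidates:
            break  # the model has never seen this word followed by anything
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical training text, invented for this example.
sample_corpus = (
    "the audit report cites a study by smith . "
    "the study by jones cites a report by smith ."
)
model = train_bigram_model(sample_corpus)
print(generate(model, "the"))
```

The output reads like a sentence about who cited what, but it is stitched together from word frequencies alone. A large model is vastly more capable, yet the same gap between fluent and verified remains, which is why human review matters.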

Furthermore, the use of public AI tools introduces security vulnerabilities. When employees input sensitive company data – such as financial reports, customer lists, or proprietary code – into a public AI platform, that information may be retained by the provider and, depending on the tool’s terms, used to train the model. This means your confidential data could potentially be exposed to other users, which would amount to a serious data breach. The convenience of AI can easily overshadow the fundamental need for data security and accuracy, creating a landscape of hidden risks for uninformed businesses.
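One simple safeguard is to strip obvious identifiers from text before it leaves your systems. The sketch below is a minimal illustration only: the two patterns and the `redact` function are assumptions made for this example, they catch just email addresses and long digit sequences, and they are no substitute for a proper data loss prevention tool or a clear usage policy.

```python
import re

# Illustrative patterns only; real sensitive data takes many more forms.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Eight or more digits, optionally separated by single spaces or hyphens
# (for example account or card numbers).
LONG_NUMBER_PATTERN = re.compile(r"\b\d(?:[ -]?\d){7,}\b")


def redact(text: str) -> str:
    """Replace email addresses and long digit sequences with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = LONG_NUMBER_PATTERN.sub("[NUMBER]", text)
    return text


prompt = "Email jane.doe@example.com about account 1234 5678 9012."
print(redact(prompt))
```

A filter like this reduces accidental exposure, but names, addresses, source code, and commercial details would all pass straight through it, so it works best alongside rules about which tools staff may use and for what.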

What are the consequences of using AI unchecked?

Reputational damage

Trust is a cornerstone of any successful business. If your company produces a report with fabricated data or releases a marketing campaign with AI-generated biases, the damage to your reputation can be swift and severe. Customers, partners, and the public expect accuracy and integrity. An AI-driven error can make your organisation appear incompetent or untrustworthy, an image that is difficult to repair.

Financial loss

The financial impact can be immediate. As seen with Deloitte, mistakes can lead to demands for refunds on work that was not up to standard. The cost of rectifying AI-generated errors, whether it’s redoing a project, managing a public relations crisis, or dealing with legal challenges, can be substantial. In a competitive market, losing a contract or a client due to a preventable AI mistake can have lasting financial repercussions.

Data breaches

Perhaps one of the most severe risks is the potential for a data breach. Inputting sensitive or confidential information into unsecured, public AI tools can be equivalent to posting it on a public forum. This can lead to the loss of trade secrets, intellectual property, and customer data, not to mention the loss of customer trust that follows such a breach.

How can businesses prevent these risks?

The goal is not to ban AI, but to manage it. Implementing a structured framework for AI governance is the most effective way to harness its benefits while mitigating its risks. One of the leading global standards for this is ISO 42001.

Understanding ISO 42001

ISO 42001 (formally ISO/IEC 42001:2023) is the world’s first international standard for Artificial Intelligence Management Systems (AIMS). It provides organisations with a structured framework to govern the development, deployment, and use of AI in a responsible manner. Think of it as a management system standard in the same family as ISO 9001 for quality, but specifically tailored to the unique challenges of AI.

The standard is designed to help businesses address ethical considerations, transparency, accountability, and security related to AI. By adopting this framework, you can demonstrate to stakeholders, customers, and regulators that you’re committed to managing AI responsibly.

The structure of ISO 42001

ISO 42001 follows the same high-level structure as other ISO management system standards, making it easier to integrate with existing systems like ISO 27001 (Information Security). Its key components guide an organisation through:

- Understanding the organisation and its context: identifying internal and external issues relevant to your AI systems.

- Leadership and commitment: ensuring that top management is accountable for the AI management system.

- Planning: setting AI objectives and planning actions to address risks and opportunities. This includes conducting AI impact assessments.

- Support: allocating resources, ensuring competence, and managing communication and documentation.

- Operation: implementing the processes and controls for the entire AI system lifecycle, from design and development to deployment and monitoring.

- Performance evaluation: monitoring, measuring, and evaluating the AI management system’s effectiveness.

- Improvement: continuously improving the system based on performance feedback.

How ISO 42001 works in practice

Implementing ISO 42001 involves establishing clear policies and processes for AI usage. This framework forces your organisation to ask critical questions. Who’s accountable if an AI system makes a mistake? How will we ensure the data used to train our AI models is accurate and unbiased? How will we inform clients that they’re interacting with an AI, and are there situations where we should not use AI at all? By systematically addressing these questions, you build a robust governance structure that turns AI from a potential liability into a controlled and valuable asset.

Don’t ban AI, govern it.

The case of Deloitte and the AI-generated report serves as a timely warning. Artificial intelligence is a transformative technology, but its power must be wielded with caution and foresight. Simply allowing unchecked AI usage is an invitation for financial loss, reputational harm, and serious security breaches.

The path forward is not to fear or forbid AI, but to govern it. By implementing a clear framework like ISO 42001, your business can establish the necessary guardrails for responsible AI integration.

We can help get your ISO Certification journey started

Complimentary online training for all clients: we offer complimentary online training courses for our clients that can be accessed by your entire organisation – it’s the best way to gain confidence and knowledge and help you prepare for your audit.

Partner with us to get your business to higher standards: with 30 years of experience, Citation Certification has partnered with thousands of organisations on their certification journey.

Lean on us to access our expertise: feel at ease knowing that our auditors are supportive, friendly and personable people who are passionate about high standards. They’re locally based and dedicated to delivering high-quality customer care. Have a question or need some guidance on a standard? We’re always available to help. Contact us here.

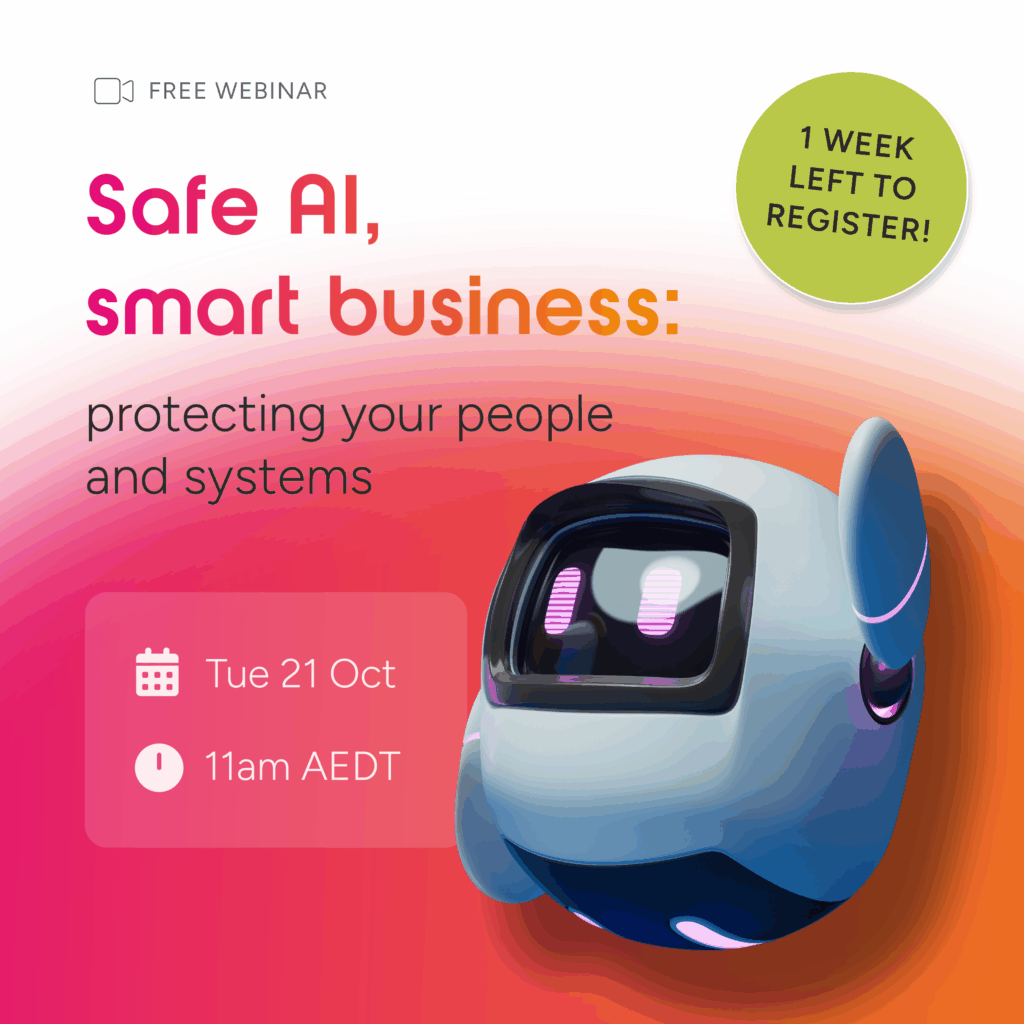

Want to learn more?

We’re hosting a webinar on how to prevent AI risks. You can register below.